Building For Voice Control

This post is brought to you by Emerge Tools, the best way to build on mobile.

Voice Control is a groundbreaking accessibility technology that Cupertino & Friends™️ have made available on iOS devices. It made its debut with iOS 13, and it allows you to do…well, basically everything on your iPhone using only your voice.

For real - try it right now! Hold up your device and simply ask Siri to turn it on, “Hey Siri, turn on Voice Control.”

let concatenatedThoughts = """

If it's your first time using Voice Control, iOS might have to download a one-time configuration file before it's ready to use. Voice Control is also available on macOS, where it may need to perform the same setup.

"""

Once it’s activated, you pretty much say “verb-noun” actions to navigate and use iOS. For example, “Open App Switcher” or “Go Home.” If you’re unsure of what you can do, you can even say “Show Commands” or “Show me what to say” for some hints.

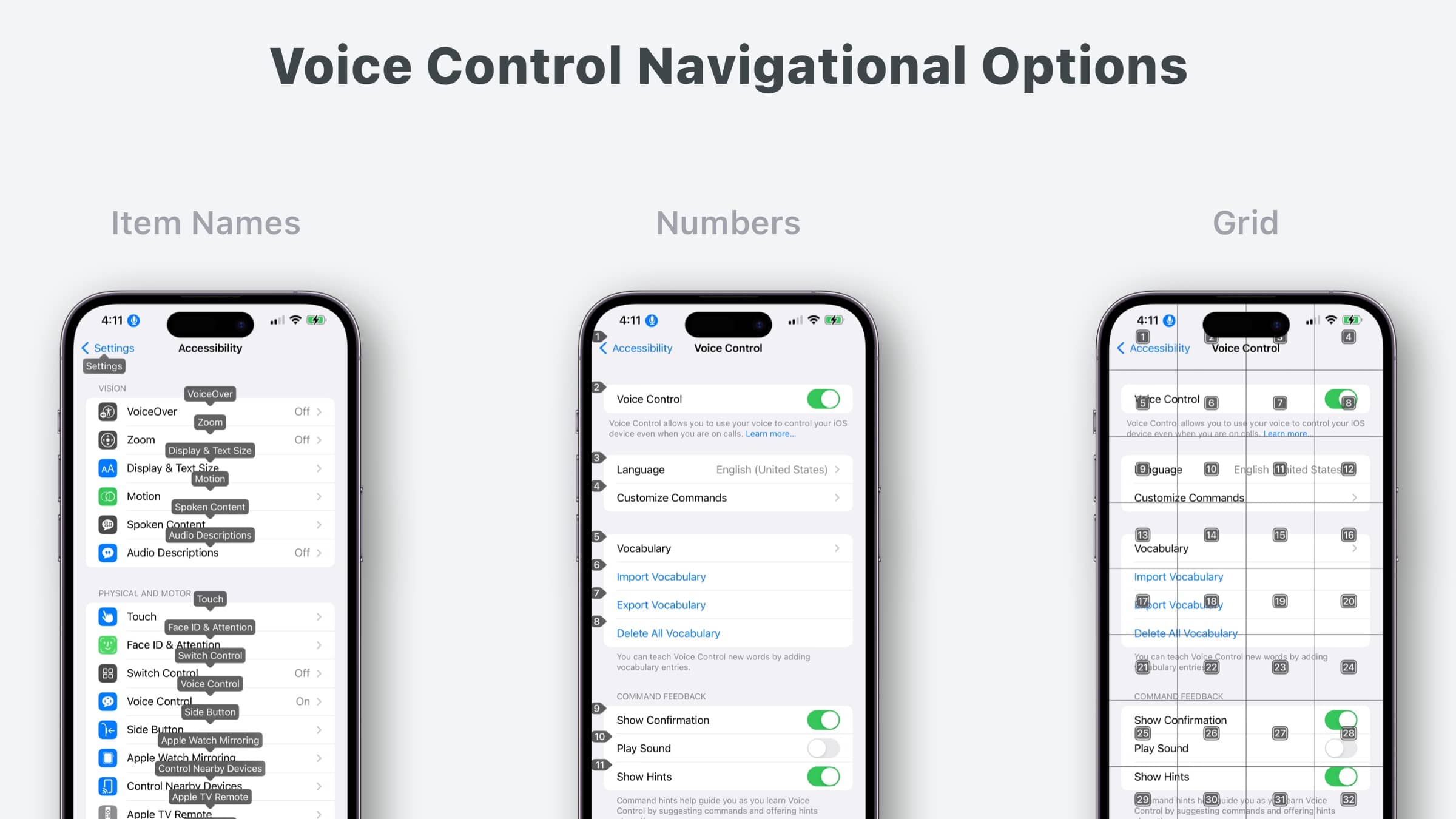

Voice Control has three primary display modes. For anything that is opted into the accessibility engine, you can see:

- Item Names: This maps to the element’s accessibilityLabel value.

- Numbers: Instead of names, this option simply numbers each element.

- Grid: Finally, this route splits out the interface into grid portions, allowing you to focus on an area and drill down into it.

Supercharge AX Testing

One thing developers may not realize is that, in addition to opening up the iPhone to folks who may have motor disabilities, Voice Control is an incredible cheat code for iOS engineers, too.

Why? Because you can see all of your interface’s accessibility label values instantly!

This makes it trivial to see where you may have missed something. If you’re unfamiliar with accessibility programming on iOS, the accessibility label is one of the most important properties to know about, if not the most important.

The item names option is the default mode, so you’ll be able to take stock of things quickly. These days, this is my go-to way to test things out for VoiceOver. If you find that your accessibility label isn’t quite right for Voice Control, there’s an API to change it, too:

```swift
// In UIKit
open var accessibilityUserInputLabels: [String]!

// In SwiftUI
.accessibilityInputLabels([Text])
```

Voice Control will respond to any of the strings in this array. Perhaps most importantly, the first string in the array supersedes the accessibility label value for display purposes.
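To make that precedence concrete, here's a toy model in plain Swift. VoiceControlItem, displayName, and accepts(_:) are hypothetical names for illustration; this is not how UIKit implements Voice Control. It assumes case-insensitive matching, and that an element without input labels falls back to its accessibility label.

```swift
import Foundation

// Hypothetical stand-in for an accessibility element; not a UIKit type.
struct VoiceControlItem {
    var accessibilityLabel: String?
    var inputLabels: [String] = []

    // The first input label, when present, supersedes the accessibility label for display.
    var displayName: String? {
        inputLabels.first ?? accessibilityLabel
    }

    // Assumption: any input label is an acceptable spoken name; with no input labels,
    // the accessibility label is used instead. Comparison is case-insensitive.
    func accepts(_ spokenName: String) -> Bool {
        let candidates = inputLabels.isEmpty ? [accessibilityLabel].compactMap { $0 } : inputLabels
        return candidates.contains { $0.caseInsensitiveCompare(spokenName) == .orderedSame }
    }
}

let gear = VoiceControlItem(
    accessibilityLabel: "Audio Levels and Mixing Settings",
    inputLabels: ["Audio Settings", "Settings"]
)
print(gear.displayName ?? "none") // Audio Settings
print(gear.accepts("settings"))   // true
```

VoiceOver would still read the full accessibility label; only what Voice Control shows and listens for changes.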

Consider the ever-present cog icon for settings. If you had one for a video editing app, it might look like this:

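```swift
import SwiftUI

struct SettingsButton: View {
    var body: some View {
        Button {
            openAudioSettings()
        } label: {
            Image(systemName: "gear.circle")
        }
        .accessibilityLabel("Audio Levels and Mixing Settings")
    }

    // Placeholder for the app's own navigation logic.
    private func openAudioSettings() { }
}
```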

But saying “Open audio levels and mixing settings” might be a bit much. Not to mention, a name that long will crowd the screen when Voice Control shows item names. So, this is where you may turn to accessibilityInputLabels to do two things:

- Shorten what someone needs to say.

- Give it other words that Voice Control can respond to.

Given this information, maybe you’d tack on something like this:

```swift
import SwiftUI

struct SettingsButton: View {
    // Shorter, alternate names that Voice Control can respond to.
    private let axVoiceControlCommands: [Text] = [
        Text("Audio Settings"),
        Text("Settings"),
        Text("Audio Levels"),
        Text("Mixing Settings")
    ]

    var body: some View {
        Button {
            openAudioSettings()
        } label: {
            Image(systemName: "gear.circle")
        }
        .accessibilityLabel("Audio Levels and Mixing Settings")
        .accessibilityInputLabels(axVoiceControlCommands)
    }

    // Placeholder for the app's own navigation logic.
    private func openAudioSettings() { }
}
```

Now, someone could say any of those things - whatever is most obvious and intuitive to them. Commands like “Tap Audio Levels” or “Open Settings” would work with Voice Control.
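Here's a rough sketch of the "verb-noun" idea applied to that button: split the spoken command into a verb and a target, then look the target up in each control's input labels. ControlStub, knownVerbs, and resolve(_:in:) are hypothetical, and the two-verb vocabulary is an assumption; Voice Control's real speech handling is far richer and isn't public.

```swift
import Foundation

// Hypothetical stand-in for an on-screen control and its Voice Control input labels.
struct ControlStub {
    let id: String
    let inputLabels: [String]
}

// Assumed verb list for this sketch only.
let knownVerbs: Set<String> = ["tap", "open"]

// Split "Verb Target" into a verb and a target name, then return the first control
// whose input labels contain that target (case-insensitive).
func resolve(_ command: String, in controls: [ControlStub]) -> ControlStub? {
    let words = command.split(separator: " ")
    guard let verb = words.first, knownVerbs.contains(verb.lowercased()) else { return nil }
    let target = words.dropFirst().joined(separator: " ")
    return controls.first { control in
        control.inputLabels.contains { $0.caseInsensitiveCompare(target) == .orderedSame }
    }
}

let settingsButton = ControlStub(
    id: "audio-settings",
    inputLabels: ["Audio Settings", "Settings", "Audio Levels", "Mixing Settings"]
)

print(resolve("Tap Audio Levels", in: [settingsButton])?.id ?? "no match") // audio-settings
print(resolve("Open Settings", in: [settingsButton])?.id ?? "no match")    // audio-settings
print(resolve("Tap Volume", in: [settingsButton])?.id ?? "no match")       // no match
```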

Challenges

Recently at Buffer, I’ve been improving our Voice Control experience. It mostly just worked, as well it should, since that meant we were already vending accessibility labels where we should be. That said, I did hit a few bumps in the road.

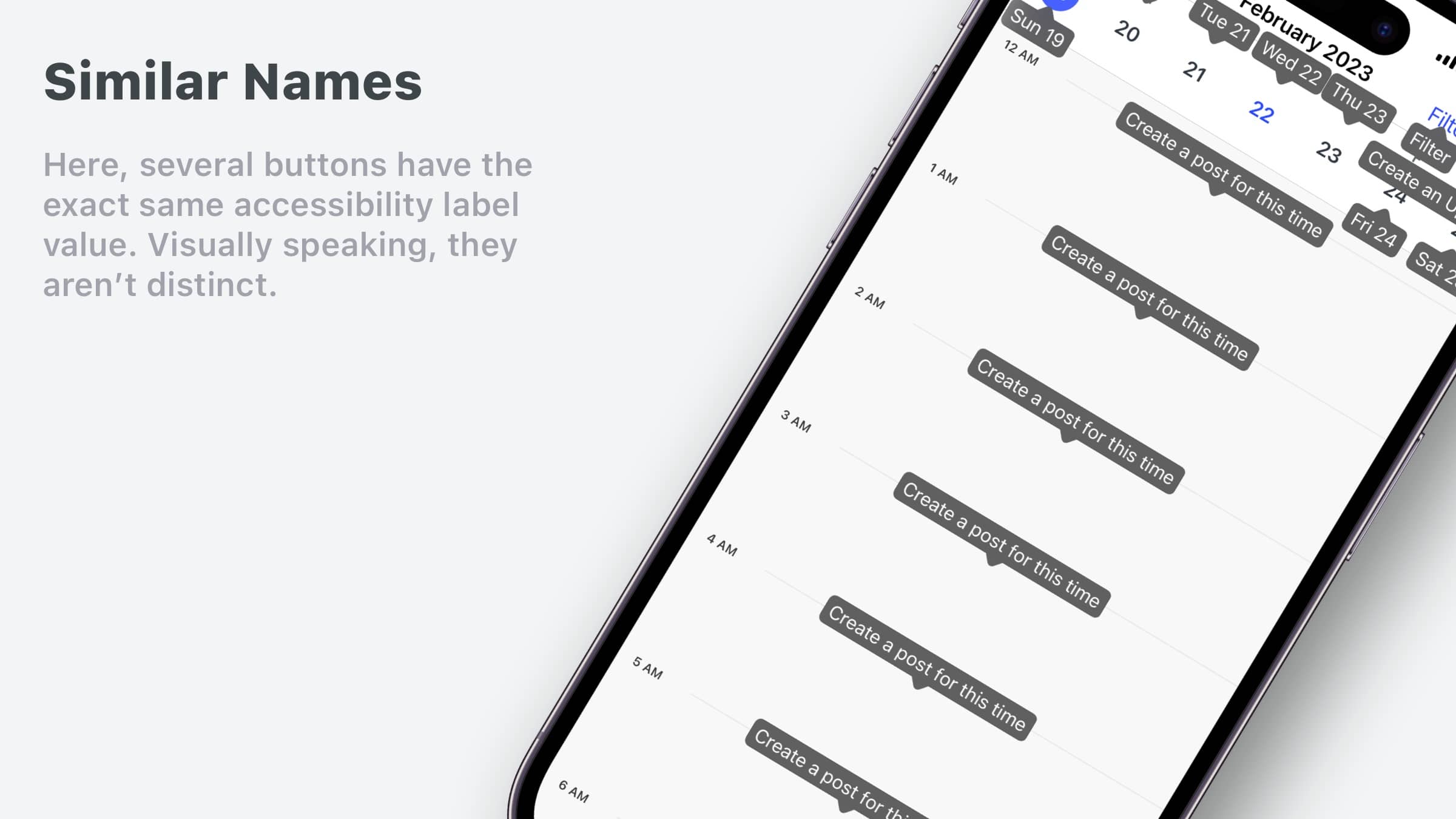

Similar Item Names

Here’s an interesting one - I had a few places where I had identical accessibility label values. Here, “Create a post for this time” shows over and over:

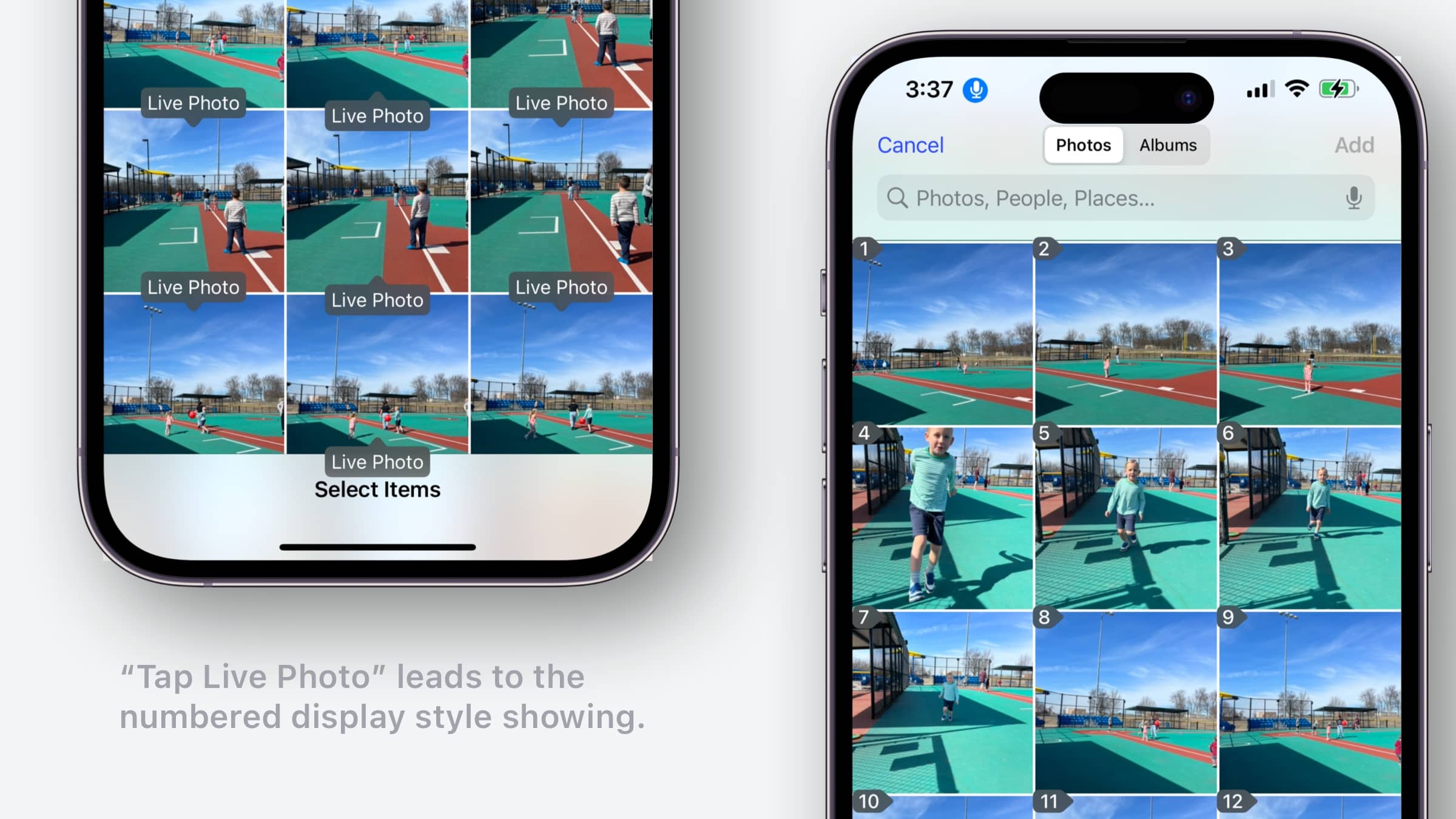

I started to wonder if this was an issue. Photos has the same “problem”, so I checked how it handles it. The answer is…Voice Control solves it for you.

When you say something that displays multiple times (below, “Tap Live Photo”) - iOS will disambiguate things by hot swapping the display style to numbers:

Clever.

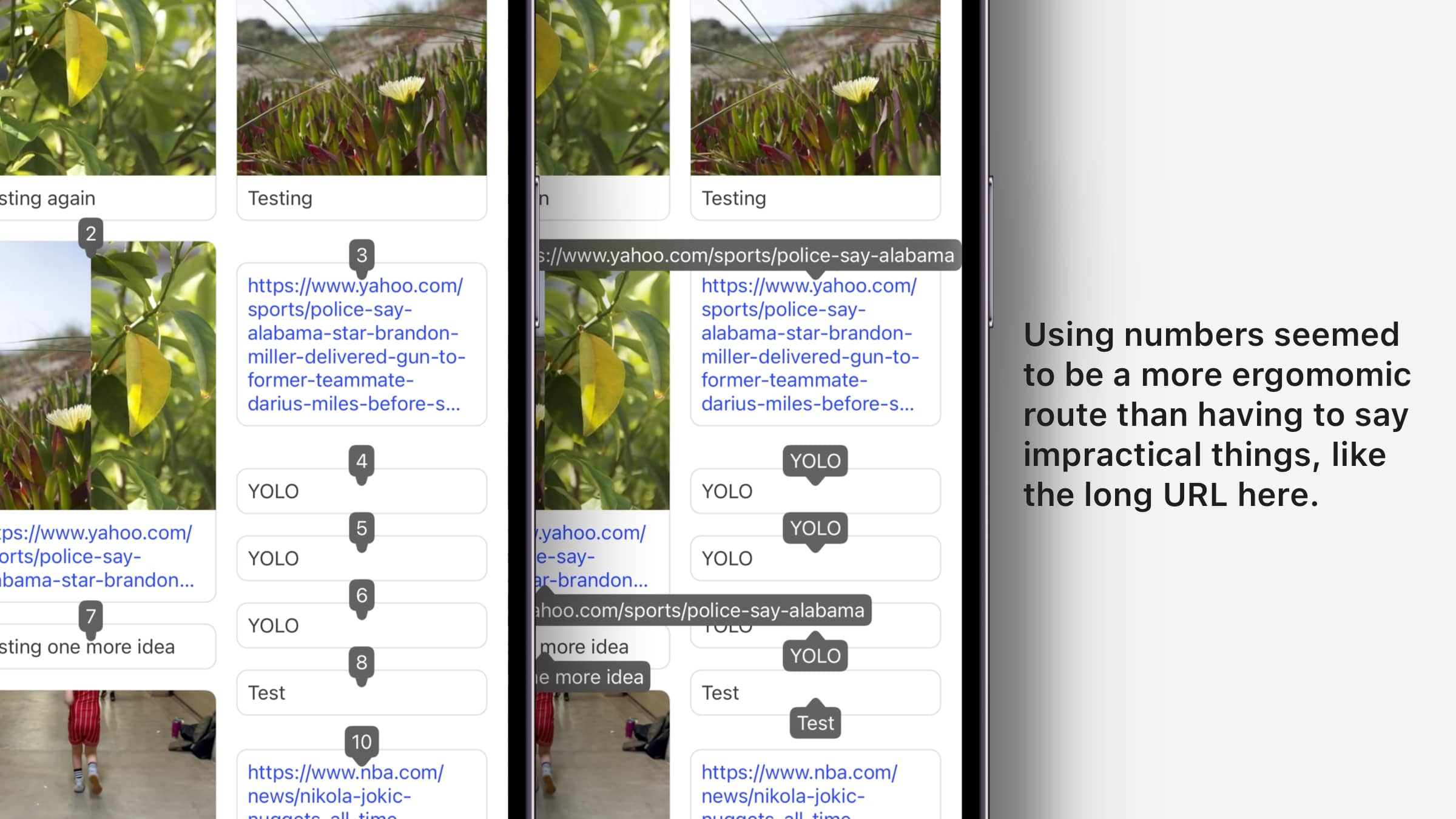
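A rough model of that behavior: when a spoken name matches more than one element, return numbered candidates instead of guessing. TapResolution and resolveTap(_:labels:) are hypothetical; iOS does this for you, so the sketch only illustrates the idea, assuming case-insensitive matching and numbers starting at 1.

```swift
import Foundation

// Hypothetical result of resolving a spoken name against on-screen labels.
enum TapResolution: Equatable {
    case noMatch
    case unique(index: Int)
    // Overlay number shown to the user -> index of the matching element.
    case ambiguous(numbered: [Int: Int])
}

func resolveTap(_ name: String, labels: [String]) -> TapResolution {
    let matches = labels.indices.filter {
        labels[$0].caseInsensitiveCompare(name) == .orderedSame
    }
    switch matches.count {
    case 0:
        return .noMatch
    case 1:
        return .unique(index: matches[0])
    default:
        // More than one match: number the candidates 1...n instead of guessing.
        var numbered: [Int: Int] = [:]
        for (offset, index) in matches.enumerated() {
            numbered[offset + 1] = index
        }
        return .ambiguous(numbered: numbered)
    }
}

let labels = ["Live Photo", "Live Photo", "Edit"]
print(resolveTap("live photo", labels: labels) == .ambiguous(numbered: [1: 0, 2: 1])) // true
```

This is why duplicate labels aren't fatal: the user just follows up with a number.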

Long, Long Names

Further still, there are places where I didn’t have an obvious string to use to interact with the interface. In our new Ideas experience in Buffer, an item in the grid could have just about anything in it. Think of them as social media posts. In this case, the text of their idea didn’t feel very friendly to speak out loud - and this was doubly (triply!) so if the text was a URL.

So I decided to use a numbered system. It’s enough to make each item unique, and it makes items obvious and easy to open. The image on the right shows what appeared by default, and the one on the left shows the direction I ended up going:

Sidebar: Gotta love testing data. Yolo!
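A minimal sketch of that numbered approach, assuming each idea's position in the grid is stable while it's on screen. Idea, voiceControlName(forIdeaAt:), and the "Idea N" wording are hypothetical; the post doesn't show Buffer's actual code or naming.

```swift
import Foundation

// Hypothetical grid item standing in for an idea.
struct Idea {
    let text: String
}

// Short, speakable Voice Control name for the idea at a zero-based grid index.
func voiceControlName(forIdeaAt index: Int) -> String {
    "Idea \(index + 1)"
}

let ideas = [
    Idea(text: "https://example.com/some/very/long/link"),
    Idea(text: "Thread idea about launch day"),
]

// The full text can stay as the accessibility label for VoiceOver; only the
// Voice Control name is shortened. In SwiftUI, each cell might apply
// .accessibilityInputLabels([Text(voiceControlName(forIdeaAt: index))]).
let names = ideas.indices.map(voiceControlName(forIdeaAt:))
print(names) // ["Idea 1", "Idea 2"]
```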

No API to Detect Voice Control

And finally, this was the sticking point for me. Unlike…literally every single accessibility API on iOS…there is no way to know when Voice Control is in use! This proved problematic for places where we had code like this:

```swift
func tableView(_ tableView: UITableView, didSelectRowAt indexPath: IndexPath) {
    // TheOneWithTheSwitchInIt is a placeholder for the row that hosts the UISwitch.
    if indexPath.row == TheOneWithTheSwitchInIt {
        if UIAccessibility.isVoiceOverRunning {
            // Perform the action that toggling
            // the UISwitch would normally do.
        }
    }
}
```

Why would we have code like this? Well, some table cells have UISwitch controls in them. It’s a standard UX pattern seen all over iOS. Our implementation reasons that people won’t tap the cell itself, but will interact with the switch directly.

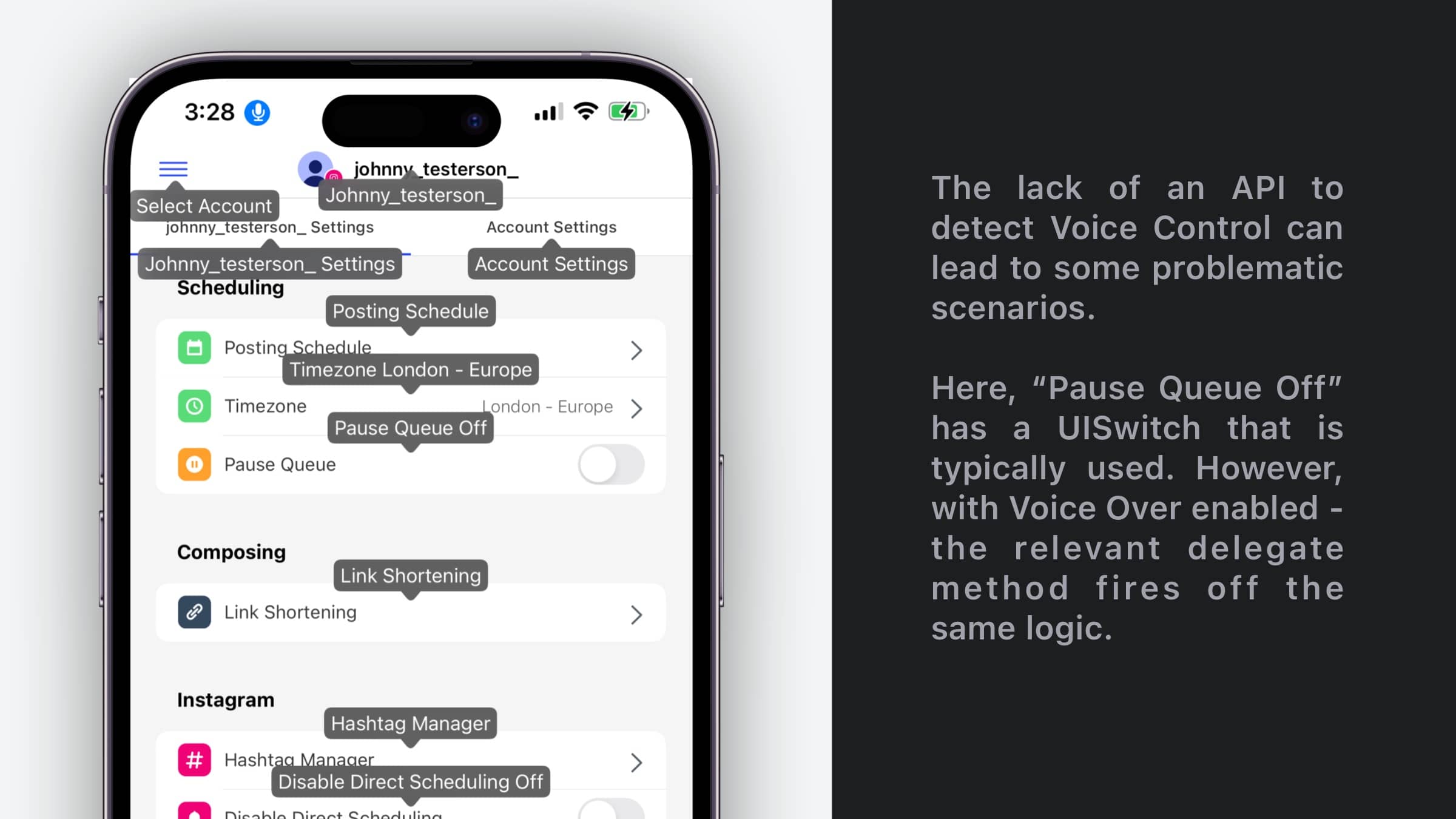

However, with VoiceOver, selecting the cell is exactly what we want, since that’s how VoiceOver users will activate the row. The problem is that Voice Control won’t work with this setup. Look specifically at the “Pause Queue Off” cell:

So if someone says “Tap Pause Queue Off”, the logic above fires, but the VoiceOver check means nothing happens. It’s important to note that VoiceOver and Voice Control are mutually exclusive: you can only use one or the other.

If there were simply an API to check Voice Control’s state, this wouldn’t be an issue. I wish Apple had something like this:

```swift
// Hypothetical: this property does not exist in UIKit.
UIAccessibility.isVoiceControlRunning
```

But alas, it does not - making issues like these harder to solve than they should be.
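Without that API, one possible workaround is to drop the VoiceOver check entirely and toggle the switch whenever its row is selected, however the selection happened. Here's a plain-Swift sketch of that handler logic; SwitchRow, SettingsTableModel, and didSelectRow(at:) are hypothetical stand-ins for the table view delegate and the cell's UISwitch. The trade-off is that touch users who tap the cell body will now flip the switch too, which may or may not fit your design.

```swift
// Hypothetical model of a table row that hosts a UISwitch.
struct SwitchRow {
    let title: String
    var isOn: Bool
}

struct SettingsTableModel {
    var rows: [SwitchRow]
    let switchRowIndex: Int

    // Stands in for tableView(_:didSelectRowAt:) with no assistive-technology check:
    // a VoiceOver double-tap, a Voice Control "Tap" command, or a plain tap on the
    // row all toggle the switch. The real cell would also need its UISwitch updated.
    mutating func didSelectRow(at row: Int) {
        guard row == switchRowIndex else { return }
        rows[row].isOn.toggle()
    }
}

var table = SettingsTableModel(
    rows: [SwitchRow(title: "Pause Queue", isOn: false)],
    switchRowIndex: 0
)
table.didSelectRow(at: 0)
print(table.rows[0].isOn) // true
```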

Final Thoughts

Voice Control is crazy cool. It’s one of those technologies that Apple introduced and I immediately thought, “Wow, they are ahead of everyone else.” But there are some gotchas: it still baffles me that you can’t detect whether it’s running at the API level. Still, as they say in Cupertino, I guess I’ll file a radar.

However, it’s one of the single best ways to test your own VoiceOver implementations. And, most importantly, it opens up iOS to many more people who might not otherwise be able to use their iPhones to their fullest potential. That can’t be thought of as anything other than a massive win.

Until next time ✌️